Discover ISIR

Our teams bring together researchers from various disciplines around shared objects, applications and scientific questions within the two major challenges of robotics: interaction with humans and autonomy.

The advent of artificial intelligence and robots is bringing profound transformations to our societies. ISIR researchers help to anticipate them by working on the autonomy of machines and their ability to interact with human beings.

Discover all job, internship, PhD and postdoctoral offers at the Institute for Intelligent Systems and Robotics.

Happening now

News |

TELIN PROJECT: UNDERSTANDING INFANT LAUGHTER TO DEVELOP SOCIALLY INTERACTIVE ROBOTIC AGENTS

TELIN – The Laughing Infant. Laughter, one of the earliest forms of communication in infants, emerges as early as three months of age, well before language, gestures, or walking. Recent studies have highlighted the close link between the acquisition of laughter and advanced cognitive skills, particularly related to understanding…

News |

ÉTUDIANTES EN TERRAIN MINÉ: FILM AND DEBATE

ISIR’s parity and equality team invites you to a screening of the documentary “Étudiantes en terrain miné” by Charlotte Espel, which addresses sexist and sexual violence in higher education and research through several testimonies from female students and PhD candidates. The screening will be followed by a debate with the…

News |

2 RESEARCH TEACHING POSTS IN COMPUTER SCIENCE AND MECHATRONICS: APPLY BEFORE 29 MARCH!

The 2024 recruitment campaign for teacher-researchers at Sorbonne Université is open from 22 February 2024 at 10:00 until 29 March 2024 at 16:00. Applications can only be made via the Galaxie ministerial portal. Among the positions opened by the Faculty of Science and Engineering of Sorbonne Université, two positions…

News |

THE INTERNATIONAL FRANCOPHONE CONFERENCE ON HUMAN-COMPUTER INTERACTION TAKES PLACE IN PARIS FROM MARCH 25 TO 29

35th International Francophone Conference on Human-Computer Interaction. The IHM’24 conference, supported by the Association Francophone d’Interaction Humain–Machine (AFIHM), is the privileged venue for exchanges on the latest advances in research, development and innovation in Human–Computer Interaction. It will take place from 25 to 29 March 2024 at Sorbonne University, in Paris (France)…

News |

NEUROHCI PROJECT: DEVELOPING HCI MODELS OF USER BEHAVIOR

NeuroHCI project – Multi-scale decision making with interactive systems. Neuroscience studies phenomena involving both decision-making and learning in humans, but has received little attention in HCI. The NeuroHCI project is a human-computer interaction (HCI) project that aims to design interactive systems that develop user expertise by establishing a human-machine…

News |

RENEWED DYNAMISM AT ISIR

ISIR is undergoing changes at the start of 2024, marked by the arrival of a new management team comprising Stéphane Doncieux* and Mohamed Chetouani**. The incoming management team succeeds Guillaume Morel, professor of robotics, who has held the position of director since 2019. *Stéphane Doncieux, director of ISIR,…

News |

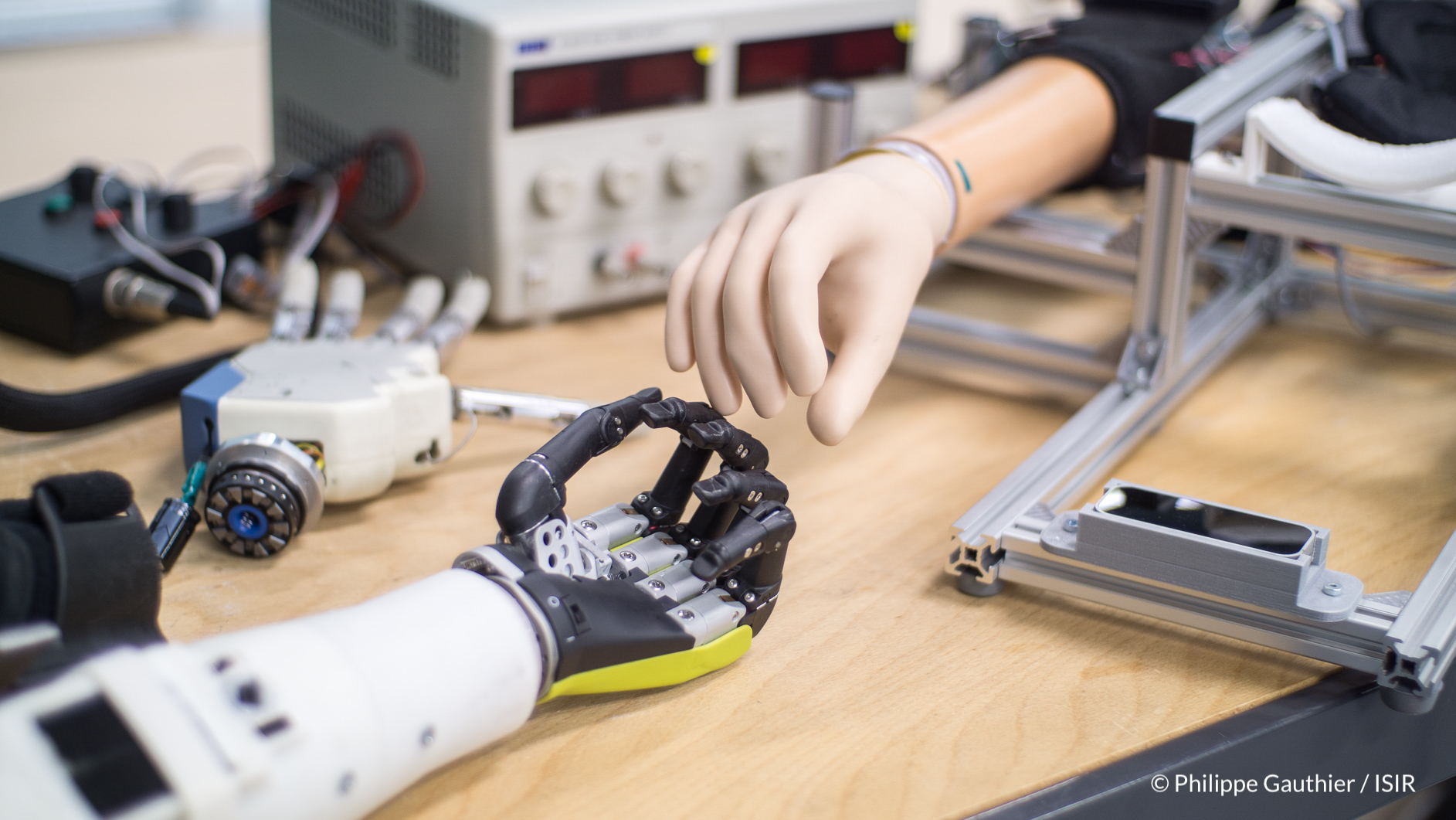

3 ISIR TEAMS PREPARE FOR CYBATHLON 2024 EVENTS

This year, ISIR will take part in the Cybathlon with three teams: “A-eye”, “Smart ArM”, and “Smart ArM ROB”. During the competition, these teams will demonstrate their technological advances and the skills of their pilots in specific everyday tasks. This Friday, 2 February, two of the teams…

News |

VIRTUAL REALITY IN SURGERY: A STUDY OF THE IMPACT OF IMMERSION ON SURGICAL LEARNING

Source: CNRS Sciences Informatiques. As part of her Master 2 in surgical sciences, Eya Jaafar, supervised by Ignacio Avellino, CNRS research fellow at ISIR, and Professor Geoffroy Canlorbe (AP-HP – Sorbonne University), studied the impact of immersive video via virtual reality headsets on surgical learning. Traditional surgical training is…

News |

TRALALAM PROJECT: EXPLORING LARGE LANGUAGE MODELS FOR MACHINE TRANSLATION

Tralalam Project – Translating with Large Language Models. Trained on multimodal gigacorpora, large language models (LLMs) can be used for a variety of purposes. One possible purpose is machine translation, a task for which the LLM-based approach provides a simple answer to two difficult points: The Tralalam project, accepted under the…

News |

ISIR IS BUILT ON A HUMAN BASE LARGELY FED BY IMMIGRATION

Congratulations to all those who have chosen to come to France and contribute to its development. And above all, thank you. Last April, ISIR invited its International Scientific Advisory Board, made up of some of the world’s leading scientists: Elisabeth André (Universität Augsburg), Antonio Bicchi (Italian Institute of Technology…