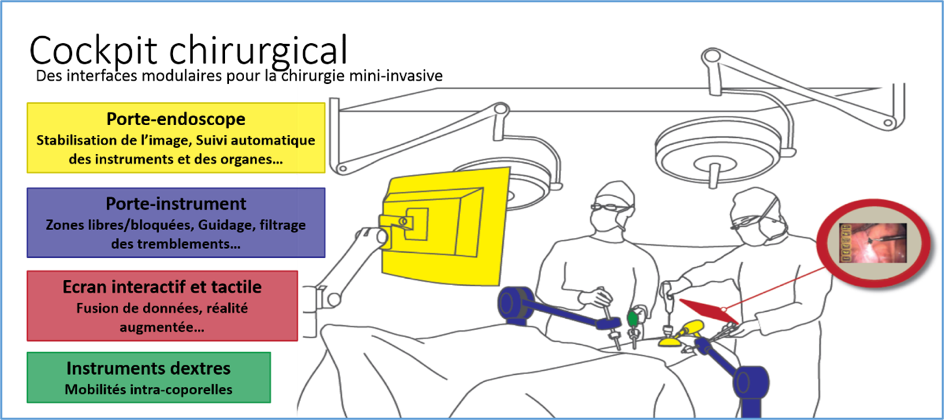

This project aims to make surgical gestures easier in minimally invasive surgery, with a particular focus on interfaces and human-machine interaction.

Modular interfaces to facilitate minimally invasive surgery

The modules are designed to fit fully into the care pathway and current clinical practice. To that end, we focus on interfaces and surgeon-machine interaction.

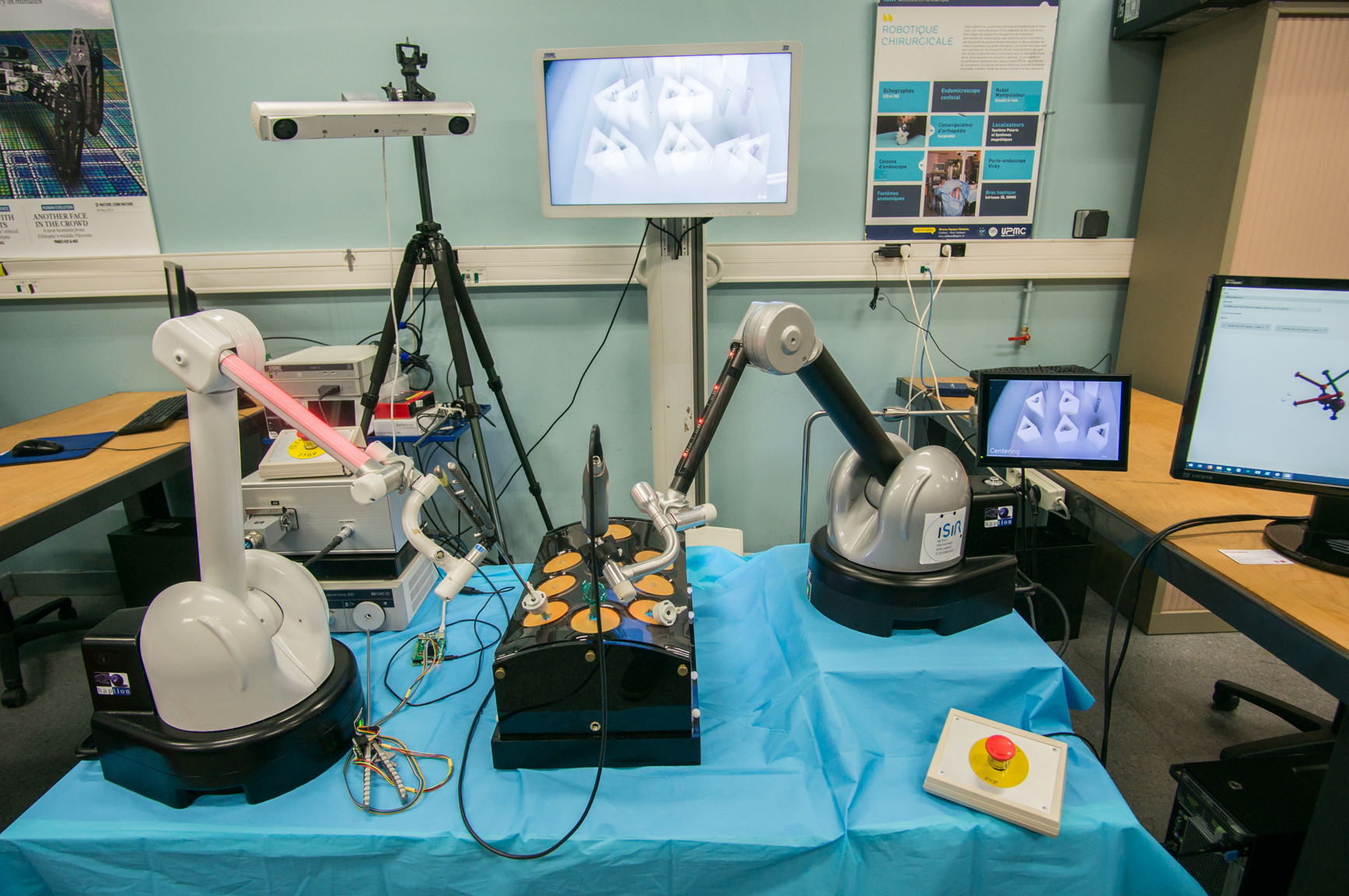

The main research topics are:

– Control of comanipulated instrument-holder robots for gesture assistance (virtual guides),

– Augmented reality (display and manipulation of virtual objects in a real scene, tangible interfaces),

– Surgeon-machine interactions (switching between control modes, analysis of learning traces),

– Operating room interactions (observations, interviews, protocols).

The context

Ambulatory surgery allows patients to return home on the day of their operation. It benefits patients and health professionals alike, in terms of both quality of care and organization. Minimally invasive surgery is one of the techniques that shorten hospital stays or even allow patients to be treated as outpatients.

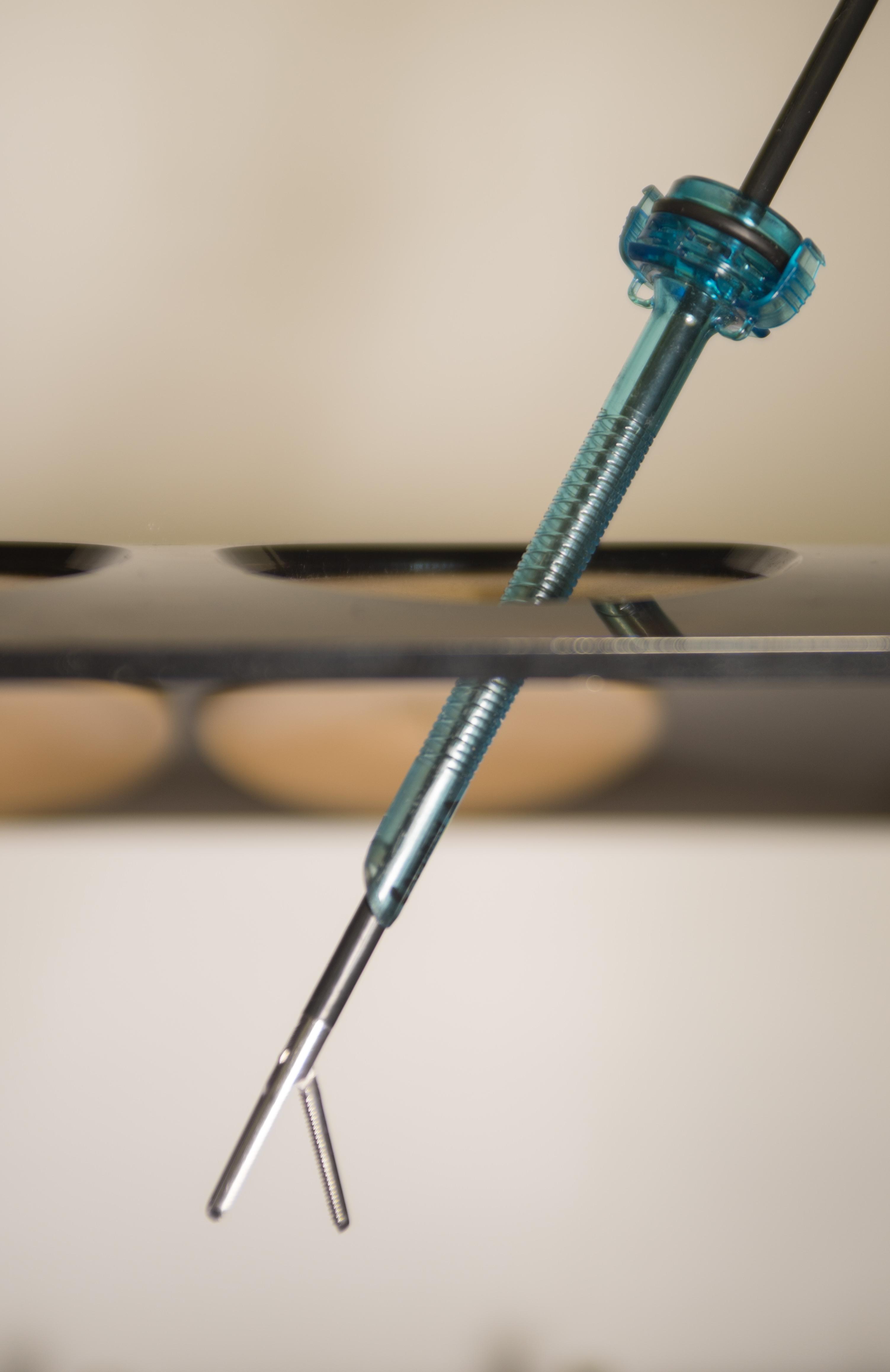

The surgeon makes small incisions (a few millimeters) through which a camera and operating instruments are introduced. Handling minimally invasive instruments is complicated and demanding for the surgeon: dexterity is reduced, the field of view is limited, and the perception of forces between the organs and the instruments is considerably degraded. As a result, minimally invasive surgery remains underused in clinical practice.

Objectives

The main objective of this project is to democratize minimally invasive surgery by offering surgeons a set of technological modules that can be combined. The aim is to assist surgeons by facilitating their gestures and their perception of the organs, so that they can operate in a minimally invasive way as easily as in open surgery.