The Audio-touch project combines research on social touch with research on the sonification of movement, making it possible to extract salient features of tactile interactions and convert them into sounds. In the project's experimental studies, participants listening to skin-to-skin audio-touch stimuli accurately associated the sounds with specific gestures and consistently identified the socio-emotional intentions underlying the sonified touches.

The same movements were also recorded on an inanimate surface, and further experiments showed that participants' perception was influenced by the surface involved in the tactile interaction (skin or plastic). This audio-touch approach could serve as a springboard for conveying the ineffable experience of social touch at a distance, whether with humans or with virtual agents.

The context

Touch is the first sensory modality to develop, and social touch has many beneficial effects on individuals' psychological and physiological well-being. Conversely, a lack of social touch heightens feelings of isolation and anxiety and intensifies the need for social contact. There is therefore an urgent need to explore ways of recreating the beneficial effects of affective touch at a distance.

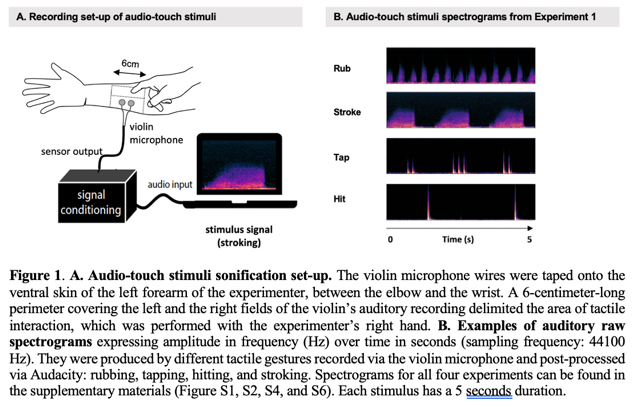

The project grew out of this urgency, but also out of a fundamental observation: touch and hearing share a common physical basis, namely vibration. Sonification studies have already shown that movements and their properties can be translated into sounds. It is therefore conceivable to translate certain characteristics of skin-to-skin interactions into perceptible auditory signals. The convergence of this need for a tactile link at a distance and the physical similarities between touch and sound gave rise to the Audio-touch project, which aims to convey the information carried by affective touch through sound.
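To make the general idea of sonification more concrete, the sketch below shows a generic parameter-mapping approach in Python, in which the speed of a simulated stroke controls both the loudness and the pitch of a tone. It only illustrates the broad technique and is not the Audio-touch processing pipeline, which is not described here; the speed profile, sample rate, frequency range and all function names (`stroke_speed`, `sonify`, `write_wav`) are hypothetical choices made for illustration.

```python
import math
import struct
import wave

SAMPLE_RATE = 16_000  # samples per second (assumed value, for illustration only)


def stroke_speed(t, duration):
    """Hypothetical speed profile (cm/s) of a single stroke: a smooth bell
    shape that starts at rest, peaks mid-gesture and returns to rest."""
    return 10.0 * math.sin(math.pi * t / duration) ** 2


def sonify(duration=1.0, base_freq=220.0, freq_span=440.0, max_speed=10.0):
    """Generic parameter-mapping sonification: speed drives loudness and pitch.

    Returns a list of float samples in [-1, 1].
    """
    n = int(duration * SAMPLE_RATE)
    samples = []
    phase = 0.0
    for i in range(n):
        t = i / SAMPLE_RATE
        # Normalise the speed to [0, 1]; it is used directly as the amplitude.
        level = min(stroke_speed(t, duration) / max_speed, 1.0)
        # Faster movement -> higher pitch.
        freq = base_freq + freq_span * level
        # Accumulate phase so the waveform stays continuous as freq changes.
        phase += 2 * math.pi * freq / SAMPLE_RATE
        samples.append(level * math.sin(phase))
    return samples


def write_wav(path, samples):
    """Write float samples as a mono 16-bit PCM WAV file."""
    with wave.open(path, "wb") as w:
        w.setnchannels(1)
        w.setsampwidth(2)  # 2 bytes = 16-bit samples
        w.setframerate(SAMPLE_RATE)
        frames = b"".join(
            struct.pack("<h", int(max(-1.0, min(1.0, s)) * 32767)) for s in samples
        )
        w.writeframes(frames)


if __name__ == "__main__":
    write_wav("stroke.wav", sonify())
```

Running the script writes a one-second mono file, `stroke.wav`, in which the tone swells and rises in pitch as the hypothetical stroke speeds up, then fades and falls as it slows down. Accumulating the phase sample by sample, rather than evaluating a fixed-frequency sine directly, avoids discontinuities (audible clicks) while the pitch changes.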

Objectives

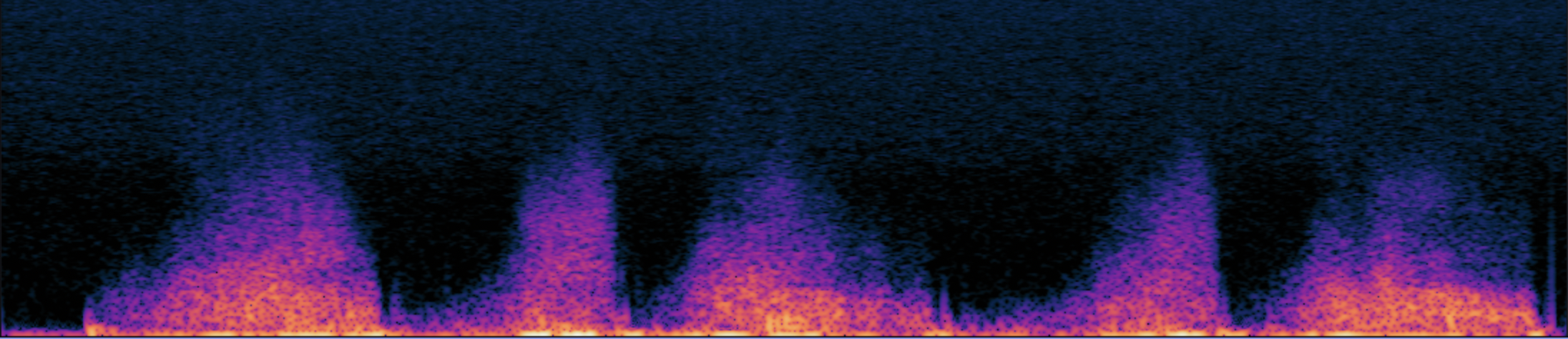

The main objective of this research is to explore the possibilities offered by audio-touch: can skin-to-skin touch be converted into sound? More specifically, the team set out to determine experimentally what social-touch information audio-touch stimuli can convey to listeners. Can different types of touch gesture be recognised from the stimuli? Can the socio-affective intentions underlying these sonified gestures be recognised? Does the surface on which the touch takes place (skin versus an inanimate object) influence these perceptions?

The results

The four experiments conducted in this study show that social touch gestures, their socio-emotional intentions and the surface touched (skin vs plastic) can be recognised from the audio-touch sounds alone. The results show that:

– participants correctly categorised the gestures (experiment 1),

– participants identified the emotions conveyed (experiment 2),

– their recognition was influenced by the surface on which the touch took place (experiments 3 and 4).

These results open up new perspectives at the intersection of haptics and acoustics. The Audio-touch project sits precisely at this crossroads, both in its methodology for capturing tactile gestures and in its focus on how humans perceive sound signals derived from touch. The results are also relevant to research on human-computer interaction, suggesting that sounds derived from social touch could enrich multisensory experiences, particularly in interactions with virtual social agents.

Partnerships and collaborations

The project is a collaboration between:

– Alexandra de Lagarde, PhD student in the ACIDE team at ISIR,

– Catherine Pelachaud, CNRS research director in the ACIDE team at ISIR,

– Louise P. Kirsch, lecturer at Université Paris Cité and former postdoctoral researcher in the Piros team at ISIR,

– Malika Auvray, CNRS research director in the ACIDE team at ISIR.

In addition, for the acoustic aspects of the project, the study benefited from the expertise of Sylvain Argentieri for the recordings and Mohamed Chetouani for the signal processing.

The project is also part of the ANR MATCH programme, which explores the perception of touch in interactions with virtual agents, in partnership with HEUDIASYC (UTC) and LISN (Université Paris-Saclay).