MAPTICS Project: Developing a Multisensory Framework for Haptics

The MAPTICS project aims to develop a novel multimodal haptic framework by characterizing how users integrate multisensory cues into a unified percept and which facets of touch are the most essential to reproduce in virtual and digital applications.

To that end, it will investigate the potential of vibro-frictional rendering and multisensory reinforcement to create the illusion of 3D objects on the screen of a novel multimodal haptic interface, and explore how haptics can be integrated into the next generation of multisensory interfaces that combine high-fidelity tactile, auditory and visual feedback. Ultimately, this research will enable the inception and evaluation of novel multimodal HCI applications for healthy and sensory-impaired users.

The context

Current HCI interfaces commonly provide high-resolution visual and auditory feedback. In contrast, haptic feedback is often absent from displays, despite recent commercial products such as the Taptic Engine on high-end Apple smartphones. Although multimodal interaction has the potential to enhance acceptability, improve interaction efficiency, and be more inclusive for sensory-impaired people, its development is hampered by the lack of a multisensory framework for integrating haptic feedback with audio-visual signals, as well as by the low fidelity of haptics in comparison to vision and audition in displays.

Consequently, the future of multisensory feedback faces two challenges: designing high-fidelity haptic feedback, and making efficient use of the cognitive principles underlying multisensory perception. In this context, novel rendering strategies and more capable devices that integrate rich haptic feedback with existing high-resolution audio-visual rendering are increasingly considered as ways to optimize the feedback provided to users during their interaction with interfaces.

Objectives

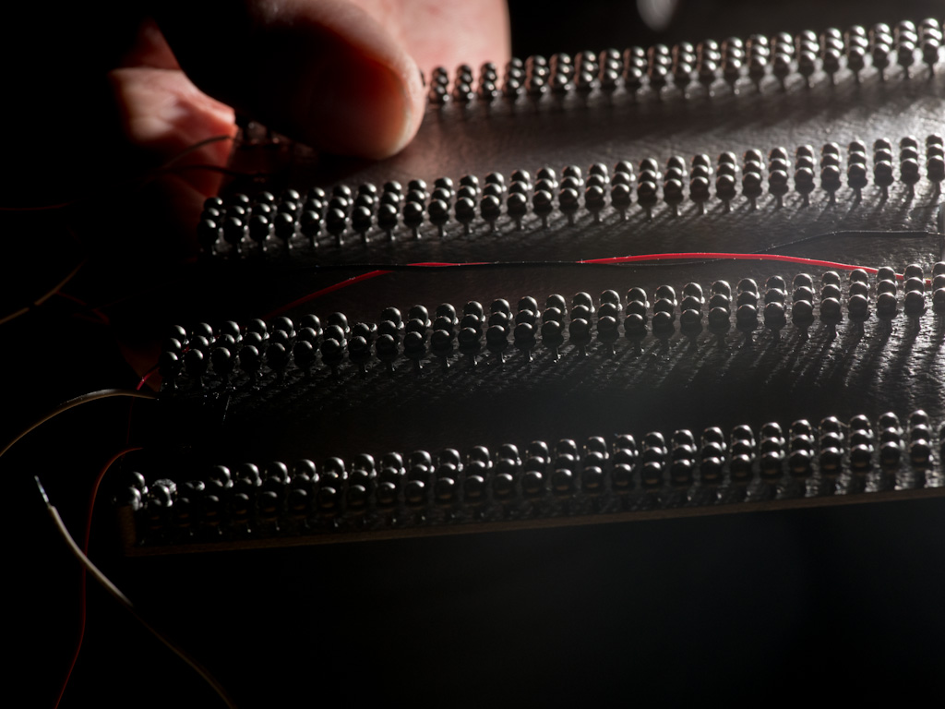

– Develop a large interactive display capable of rendering vibrotactile and force-controlled haptic feedback alongside high-resolution audio-visual capabilities. The challenge will be to create an active touch interface that provides richer feedback than current state-of-the-art scientific and commercial devices.

– Develop a realistic framework for multisensory rendering and use it to understand the cognitive processes at play when multisensory input is processed. To this end, we will use the multisensory platform being developed in MAPTICS to manipulate the congruence between auditory, visual and tactile sensory inputs, with the aim of investigating the multimodal reinforcement or disruption of cognitive representations.

– Build on the previous two objectives to create a user interface with high-resolution, multisensory-reinforced haptic feedback. With this novel interface, we will study interactive scenarios related to navigation in multisensory interactive maps and to the use of virtual buttons.

The results

The combination of vibrotactile stimulation and ultrasonic lubrication of the finger-surface interaction has not been studied yet, even though it has the potential to lift serious limitations of current technology, such as the inability of ultrasonic vibration to provide compelling feedback when the user's gesture does not involve motion of the finger, or the difficulty of creating virtual shapes with mechanical vibrations.

A better understanding of the joint implementation of both types of feedback will empower haptics and HCI scientists in their search for more efficient and more compelling haptic devices. In addition, vibrotactile and frictional haptic rendering are currently separate research topics.

After MAPTICS, we expect that haptic research will increasingly study these two complementary types of feedback together in order to develop more integrated rendering efficiently. The project will also provide a new multisensory experimental tool that will enable a more advanced study and understanding of the underlying mechanisms of sensory integration.

Partnerships and collaborations

The MAPTICS project is an ANR (Agence nationale de la recherche) project, run in partnership with Betty Lemaire-Semail from the L2EP laboratory at the University of Lille.