This project bridges machine learning and cognitive science to enable better collaboration between humans and robots. The overall goal of OSTENSIVE is to allow robots to perform tasks more effectively in collaboration with humans, by allowing improved communication and understanding between the two. By integrating the human perspective into perception, decision-making, and action generation, we aim to develop models capable of adapting to human mental states and behaviors.

In collaboration with Inria and LAAS, we intend to develop computational forward and inverse models that integrate communication into action, from reasoning and planning to the execution of movements.

The context

When humans demonstrate a task, their demonstrations are not only directed towards the objects being manipulated; they are also accompanied by ostensive communicative cues such as gaze and/or spatial and temporal modulations of the movement. These behaviors, such as pauses, repetitions, and exaggerations, might appear sub-optimal for the task itself, but humans produce them in order to communicate.

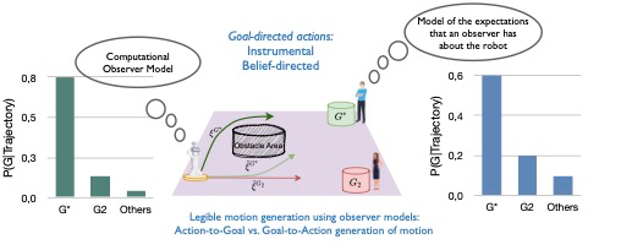

Cognitive Science addresses this challenge of communication in action by drawing inspiration from language, in particular the distinction between literal meaning and pragmatic inference. Most approaches in Human-Robot Interaction assume a literal interpretation of behaviors, which strongly limits how well the actions and intentions of the other agent can be interpreted. There is a need for forward models that can generate content relevant to the other agent (human or robot) and inverse models that can adequately interpret the other agent's actions.
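The distinction between literal and pragmatic interpretation can be illustrated with a toy Bayesian sketch. This is only one possible formalization, loosely in the style of rational-speech-act models, and not necessarily the approach used in OSTENSIVE. The goals, motions, compatibility values, costs, and function names below are all hypothetical, chosen for illustration.

```python
import numpy as np

# Hypothetical toy setup: two candidate goals and three observable motions.
GOALS = ["reach_cup", "reach_bottle"]
MOTIONS = ["direct_path", "exaggerated_toward_cup", "exaggerated_toward_bottle"]

# Assumed literal compatibility: 1.0 if a motion can serve the goal, else 0.0.
COMPATIBLE = np.array([
    [1.0, 1.0, 0.0],  # reach_cup
    [1.0, 0.0, 1.0],  # reach_bottle
])


def normalize(matrix, axis):
    """Scale entries so that they sum to 1 along the given axis."""
    return matrix / matrix.sum(axis=axis, keepdims=True)


def literal_observer(prior):
    """Inverse model: P(goal | motion) using compatibility and the prior only."""
    return normalize(COMPATIBLE * prior[:, None], axis=0)


def ostensive_actor(prior, cost, rationality=1.0):
    """Forward model: P(motion | goal).

    Favors motions that a literal observer would decode correctly,
    and penalizes each motion by its (assumed) execution cost.
    """
    observer = literal_observer(prior)
    with np.errstate(divide="ignore"):  # log(0) = -inf is intended here
        utility = np.log(observer) - cost[None, :]
    return normalize(np.exp(rationality * utility), axis=1)


def pragmatic_observer(prior, cost, rationality=1.0):
    """Inverse model that assumes the actor is being communicative."""
    actor = ostensive_actor(prior, cost, rationality)
    return normalize(actor * prior[:, None], axis=0)


prior = np.array([0.5, 0.5])     # uniform prior over goals
cost = np.array([0.0, 0.5, 0.5])  # exaggerated motions cost more to execute
actor = ostensive_actor(prior, cost)
```

In this toy setting, the actor aiming for the cup puts more probability on the exaggerated motion than on the cheaper direct path, because the exaggerated motion is unambiguous to an observer. This mirrors the point that seemingly sub-optimal behaviors can be explained by their communicative value.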

Objectives

The aim of OSTENSIVE is to develop computational forward and inverse models using machine-learning-based approaches conditioned on mechanisms for reasoning about humans. Our research ranges from the development of high-level models for reasoning and task planning to the implementation of low-level motion planning for precise physical execution.

Building on approaches from Cognitive Science, we will investigate the situations, indicators, and metrics that determine the conditions under which humans engage with and benefit from such models, using new approaches for the real-time evaluation of second-person perspective taking. We will develop new models able to synthesize ostensive and interactive robot motions, as well as probabilistic representations of ostensive actions learned from human demonstrations.

The results

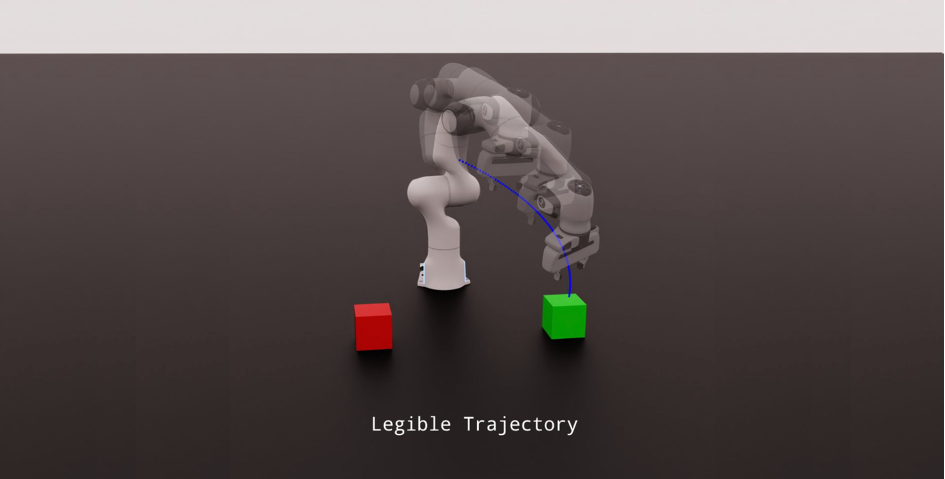

We aim to adapt and extend experimental protocols used to study social cognition. Applications cover many sectors and include social navigation and mobile manipulation in shared environments. By integrating ostensive action into different robotics platforms, we want to ensure that robots can navigate fluidly and perform tasks alongside humans in real-world scenarios.

OSTENSIVE’s work, carried out at several levels, aims to enable robots to correctly understand and interpret human intentions, to define explainable plans to accomplish tasks, and to perform actions in a manner that is legible to a human observer.

After ethical approval, we will conduct extensive experiments with naive participants to validate the ostensive communication skills of the robots. These participants are expected to benefit most from such skills when accomplishing a task with the robot.

Partnerships and collaborations

OSTENSIVE is a collaborative initiative between ISIR, LAAS-CNRS, and INRIA to create a unified framework for human-robot interaction. ISIR is the coordinator of the OSTENSIVE project (ANR-24-CE33-6907-01).

ISIR – ACIDE Team

– Developing forward and inverse inference models, with work on understanding human intent and predicting how an agent’s actions will affect human perception.

– OSTENSIVE Lead: Mohamed Chetouani

INRIA (Nancy) – LARSEN Team

– Investigating ostensive motions at the low level, leveraging tools such as control primitives and robotic policies.

– OSTENSIVE Lead: Serena Ivaldi

LAAS-CNRS (Toulouse) – RIS Team

– Developing socially aware planning methods, using Theory of Mind and perspective-taking.

– OSTENSIVE Lead: Rachid Alami