FHF: A Frontal Human Following technology for mobile robots

Human following is an effective and practical function for robots, particularly in service robotics. However, most existing research has focused on robots that follow the human from behind, with relatively little attention given to robots operating in front of the human. Frontal following, in which the robot remains within the user’s field of view, is more reassuring and facilitates interaction. It also raises new challenges: developing a tracker that can estimate the user’s pose from 2D LiDAR at knee height, especially when the legs frequently occlude each other; ensuring the safety of the user; and determining how the robot can keep up with the user when it falls behind.

The context

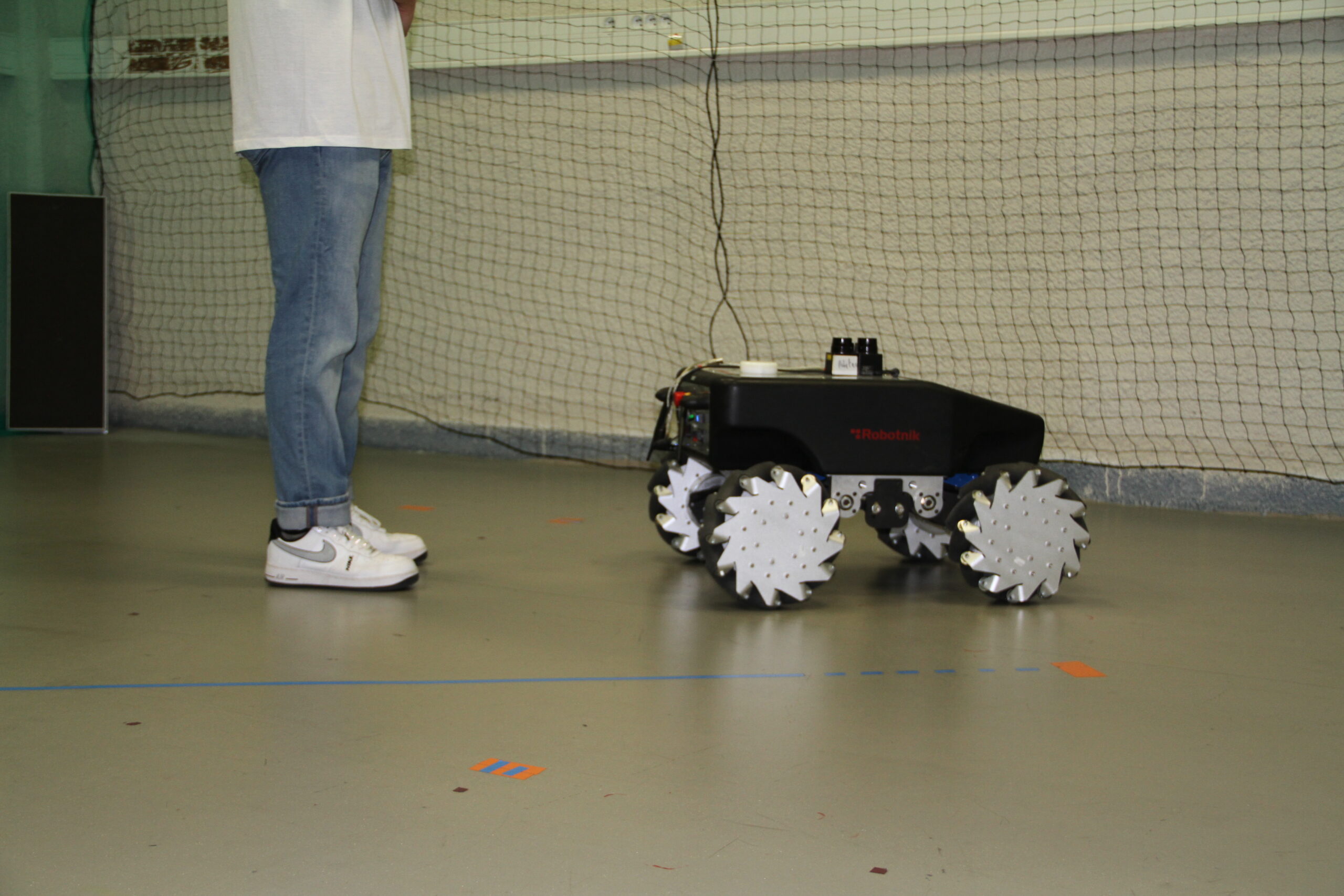

Mobile robots are increasingly common in settings such as shopping centers, hospitals, warehouses, and factories. Many tasks in these applications are shared between robots and human operators through voice, video, force interaction, etc., either because certain tasks require human expertise or agility or because the robot can assist the operator. In this project, we investigate how a mobile robot can automatically track and follow its user based on 2D LiDAR. Common rear-following algorithms keep the robot at a certain distance behind the user. However, some studies have shown that people prefer to see robots in their field of vision and may feel uncomfortable and unsafe when robots appear behind them. Moreover, some services require the robot to move in front of the user. For example, assistance robots act like guide dogs to provide navigation assistance for the visually impaired. Frontal following is therefore becoming increasingly popular and valuable.

Objectives

The scientific objectives of this project are as follows:

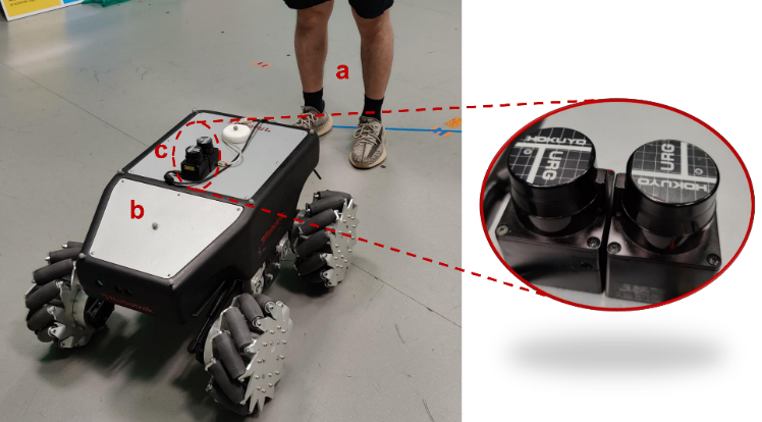

– Build a human pose (orientation and position) tracker based on 2D LiDAR at knee height for mobile robots by studying human gait;

– Collect LiDAR scans from different volunteers, together with ground truth data on human orientation, to build a data-driven model that improves human orientation estimation;

– Solve the problem of self-occlusion of the legs during scanning by modeling gait or using machine learning techniques;

– Develop a motion generator to enable the robot to move safely and naturally in front of the user.

The results

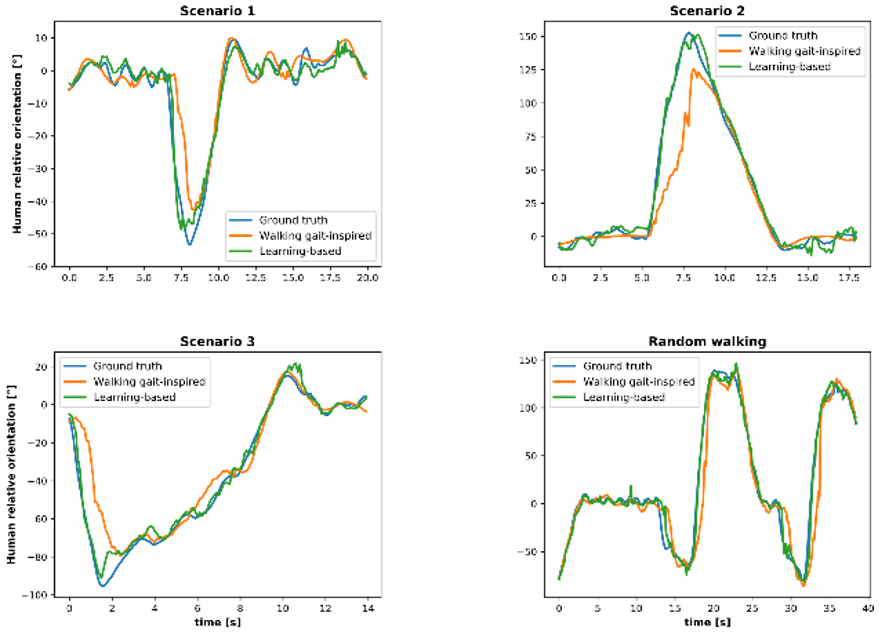

Twelve volunteers (three females and nine males) were invited to participate in the frontal following experiment, and the human pose tracker based on human gait performed well. The quantitative analyses showed that the mean absolute error (MAE) of position was around 4 cm, and the MAE of orientation was less than 12 degrees for complex walking.
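To make the two error metrics concrete, here is a minimal sketch of how a position MAE and an orientation MAE could be computed from paired estimates and ground truth. It is an illustration, not the project's evaluation code: the function names are hypothetical, the position error is assumed to be the Euclidean distance in the plane, and the orientation error is assumed to be wrapped so that, for example, 350 degrees and 10 degrees differ by 20 degrees rather than 340.

```python
import math


def wrap_angle_deg(angle):
    """Wrap an angle in degrees to the interval [-180, 180)."""
    return (angle + 180.0) % 360.0 - 180.0


def orientation_mae_deg(estimated, ground_truth):
    """Mean absolute orientation error in degrees, with wraparound handled.

    Assumption: both inputs are equal-length sequences of angles in degrees.
    """
    if len(estimated) != len(ground_truth) or not estimated:
        raise ValueError("inputs must be non-empty and of equal length")
    errors = [abs(wrap_angle_deg(e - g)) for e, g in zip(estimated, ground_truth)]
    return sum(errors) / len(errors)


def position_mae_m(estimated, ground_truth):
    """Mean position error in meters.

    Assumption: each sample is an (x, y) pair in meters and the per-sample
    error is the Euclidean distance between estimate and ground truth.
    """
    if len(estimated) != len(ground_truth) or not estimated:
        raise ValueError("inputs must be non-empty and of equal length")
    errors = [
        math.hypot(ex - gx, ey - gy)
        for (ex, ey), (gx, gy) in zip(estimated, ground_truth)
    ]
    return sum(errors) / len(errors)
```

Wrapping the angular difference before taking the absolute value matters for a walking user whose heading crosses the 0/360 degree boundary; without it, a small heading error near that boundary would be counted as an error of almost a full turn.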

Six hours of data collected from five volunteers (one female and four males) were used to build models that improve orientation estimation. Once the problem of delay was solved, the customized model achieved an MAE of between 4 and 7 degrees for all five volunteers.

The frontal following motion generator enables the robot to move naturally in front of the user while always keeping a safe distance of one meter during the experiment. For more details, see the video.
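As a rough illustration of the geometry involved, the sketch below computes a goal pose for the robot from a tracked user pose. It is not the project's motion generator: the function name `frontal_target` is hypothetical, the goal is assumed to lie directly along the user's heading at the one-meter distance reported above, and the robot is assumed to face the same direction as the user. A real motion generator would also have to handle obstacles, velocity limits, and the case where the robot has fallen behind.

```python
import math


def frontal_target(user_x, user_y, user_theta, distance=1.0):
    """Return a goal pose (x, y, theta) placed in front of the user.

    Assumptions (illustrative only):
    - user_x, user_y are in meters and user_theta is the heading in radians,
      all expressed in the same fixed frame;
    - the goal lies on the user's heading line, `distance` meters ahead;
    - the robot's goal heading equals the user's heading.
    """
    target_x = user_x + distance * math.cos(user_theta)
    target_y = user_y + distance * math.sin(user_theta)
    target_theta = user_theta
    return target_x, target_y, target_theta


# Example: a user at (2, 3) walking along the +y axis.
goal_x, goal_y, goal_theta = frontal_target(2.0, 3.0, math.pi / 2)
```

Recomputing this goal every time the tracker updates the user's pose gives the robot a moving reference that stays in the user's field of view as they turn.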

Publications

– 2D LiDAR-Based Human Pose Tracking for a Mobile Robot, ICINCO 2023;

– Human Orientation Estimation from 2D Point Clouds Using Deep Neural Networks in Robotic Following Tasks, submitted to IROS 2024;

– Large Workspace Frontal Human Following for Mobile Robots Utilizing 2D LiDAR, submitted to JIRS.