The Haptivance project aims to create a new generation of tactile surfaces capable of producing sensations of force and movement under the finger. It arose from the observation that current haptic technologies for screens without physical buttons remain imprecise and, unlike very expensive robotic devices, do not provide true kinesthetic feedback.

Building on Thomas Daunizeau’s thesis work at ISIR (with Sinan and Vincent Hayward) on acoustic metamaterials, the project seeks to shape the propagation of vibrations in order to localise tactile feedback and generate directional sensations, similar to those of physical keys or buttons, on completely smooth surfaces.

For us, this technology represents a breakthrough for medical devices: it can make them intuitive and safe to use while also simplifying cleaning and sterilisation, since it eliminates mechanical buttons, which are difficult to clean and prone to microbial growth in hospital environments.

The context

Haptic feedback is now ubiquitous: smartphones, game consoles, industrial and medical interfaces. However, these systems only generate a global vibration that cannot be precisely localised and cannot exert a net force on the finger. This severely limits their usefulness where precision is critical, such as robotic surgery, automotive interfaces and accessibility devices. In these environments, users often have to look at the screen to confirm their actions, which increases cognitive load and the risk of error.

Hospital interfaces, for example, still rely heavily on mechanical buttons, which are difficult to sterilise and subject to wear and tear, whereas smooth touch surfaces are better suited to hygiene requirements. However, these screens lack sufficiently informative tactile feedback, so caregivers must visually check each action. Existing alternatives remain costly, complex or incompatible with screens. It is in this context in particular that rich, localised tactile feedback compatible with interactive surfaces is most needed.

Objectives

The main objective of the project for the coming year is to develop a functional demonstrator of an interactive surface that produces localised and directional tactile feedback.

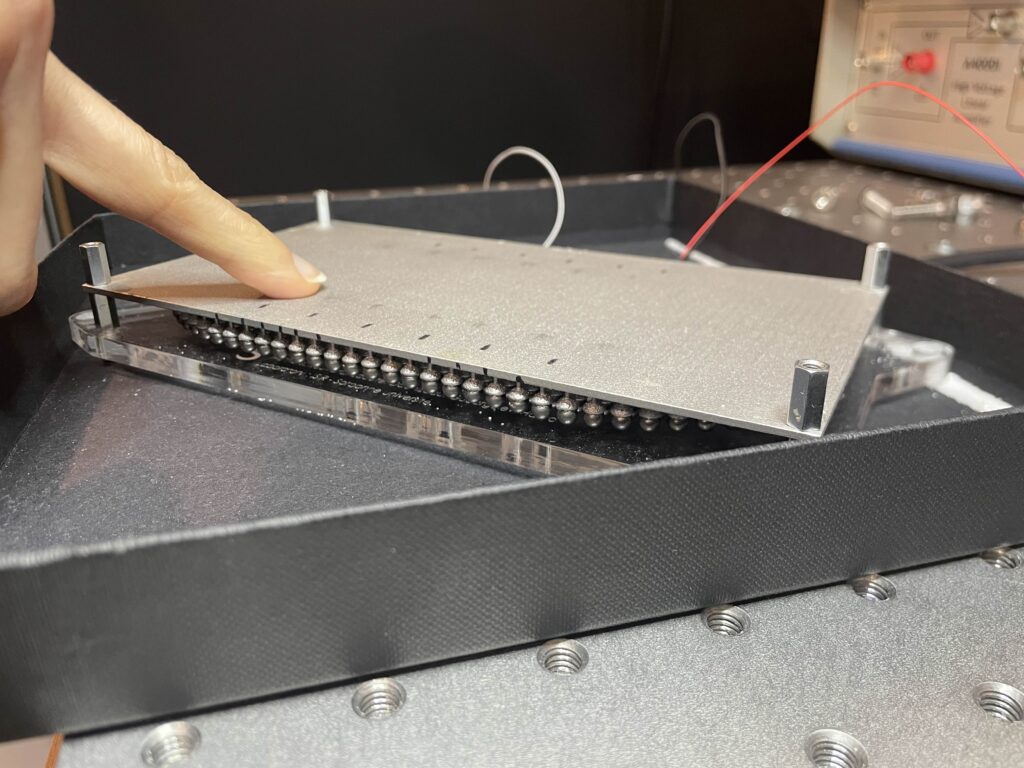

Scientifically, the project seeks to control the propagation of travelling ultrasonic waves across the surface using acoustic metamaterials. In other words, the goal is to generate a net force on the finger not through external actuation but by making the surface itself resonate. To achieve this, we still need to determine how to dimension our metamaterials so as to produce a homogeneous force across the entire surface. We also need to study which haptic sensations are most convincing when coupled with the visual display and the real-time position of the finger.

The proof of concept (POC) should enable users to feel buttons, edges, movements or guides on touchscreens. Ultimately, the project aims to develop an industrialisable technology that can be offered to screen manufacturers as a haptic solution.

The results

Initial experimental results have confirmed that travelling waves can be generated on a surface, creating a perceptible movement under the finger. The project must now extend and amplify this effect in a stable and efficient manner. The visuo-haptic demonstrator expected at the end of the project will enable multi-touch interactions: clicks, directional swipes, finger guidance, etc.

The main applications targeted are those in hospitals: surgical interfaces, monitors and imaging systems in the operating theatre, medical devices in intensive care, and robotic teleoperation interfaces.

Beyond hospitals, some situations demand sustained visual attention on a primary task and are sensitive to distraction. Driving is one example: car dashboards made up entirely of touchscreens could enable much safer interaction thanks to haptic feedback.

Overall, this technology aims to reduce the visual attention required to operate interfaces while remaining easy to clean, improving the precision of gestures and enriching the user experience, including accessibility for people with disabilities.

Partnerships and collaborations

– Support programmes: PUI Alliance Sorbonne University – MyStartUp programme, i-PhD Bpifrance

– ISIR, CNRS Sciences Information Institute / CNRS Innovation – support with the funding application

– SATT Lutech – Commercialisation, patents, maturation

– AP-HP – Innovation Hub & BOPEx third place, testing and co-design with healthcare personnel